COMPLiQ

The Global Standard for Secure, Responsible AI Deployments

The Problem

Enterprises deploying AI face shadow AI usage, data leakage, compliance violations, and missing audit trails. Traditional security models have 7 layers, and none of them address AI behavior.

The Process

Led a 13-person pod for 6 months building toward an “AI Chief Compliance Officer” for RIAs. Hit an external-dependency wall when access to custodian APIs was blocked, then pivoted the architecture to the broader enterprise AI security market.

The Outcome

Live product at compliq.ai. Patent-pending scanner architecture. Successful pivot from a narrow RIA focus to an enterprise-wide AI compliance tool.

Design Decisions

| Decision | Why | Engineering Tradeoff |
|---|---|---|
| Model-agnostic API | Enterprises want flexibility and cannot lock into a single LLM | Adds an abstraction layer but future-proofs the platform |
| Trust calibration system | High-confidence interactions auto-pass; low-confidence ones go to human review | Requires confidence-scoring infrastructure |
| Blockchain audit logs | Immutable proof for compliance audits | Adds complexity but is essential for enterprise trust |
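
To illustrate the model-agnostic API decision, here is a minimal Python sketch of the adapter pattern it implies, not CompliQ’s actual code. `LLMClient`, `EchoAdapter`, and `CompliancePlatform` are hypothetical names, and `EchoAdapter` simply echoes the prompt in place of a real vendor SDK call.

```python
from typing import Protocol


class LLMClient(Protocol):
    """Hypothetical provider-neutral interface; each vendor gets its own adapter."""

    def complete(self, prompt: str) -> str: ...


class EchoAdapter:
    """Illustrative stand-in adapter; a real one would wrap a vendor SDK."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class CompliancePlatform:
    """Scanner and audit logic depend only on LLMClient, never on a specific vendor."""

    def __init__(self, client: LLMClient) -> None:
        self.client = client

    def run(self, prompt: str) -> str:
        # Compliance checks would run here, before and after the model call.
        return self.client.complete(prompt)


platform = CompliancePlatform(EchoAdapter("model-a"))
print(platform.run("Summarize Q3 filings"))  # [model-a] Summarize Q3 filings
# Swapping vendors means swapping the adapter, not rewriting the platform.
platform.client = EchoAdapter("model-b")
print(platform.run("Summarize Q3 filings"))  # [model-b] Summarize Q3 filings
```

The platform only knows the `LLMClient` interface, so adding or replacing a model provider touches one adapter rather than the scanner and audit logic. That extra layer is the abstraction cost named in the tradeoff column.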

STAR Summary

| Stage | Details |
|---|---|
| Situation | Enterprises deploying AI faced a security gap: traditional 7-layer security models weren’t built for AI behavior. Shadow AI, prompt injection, data leakage through conversations, and missing audit trails created compliance risk, especially in regulated industries such as financial services. |
| Task | Design and lead development of an AI compliance platform, initially targeting RIAs (Registered Investment Advisors) with an “AI Chief Compliance Officer” that could monitor, audit, and ensure regulatory compliance. |
| Action | Led a 13-person development pod. Designed the patent-pending scanner architecture, built the trust calibration system, and wrote the core scanner rule logic. When custodian API access was blocked after 6 months, pivoted from an RIA-specific tool to an enterprise-wide AI security platform. |
| Result | Live product at compliq.ai. Patent-pending architecture applicable across regulated industries. The pivot preserved 6 months of technical work while opening a broader market. |

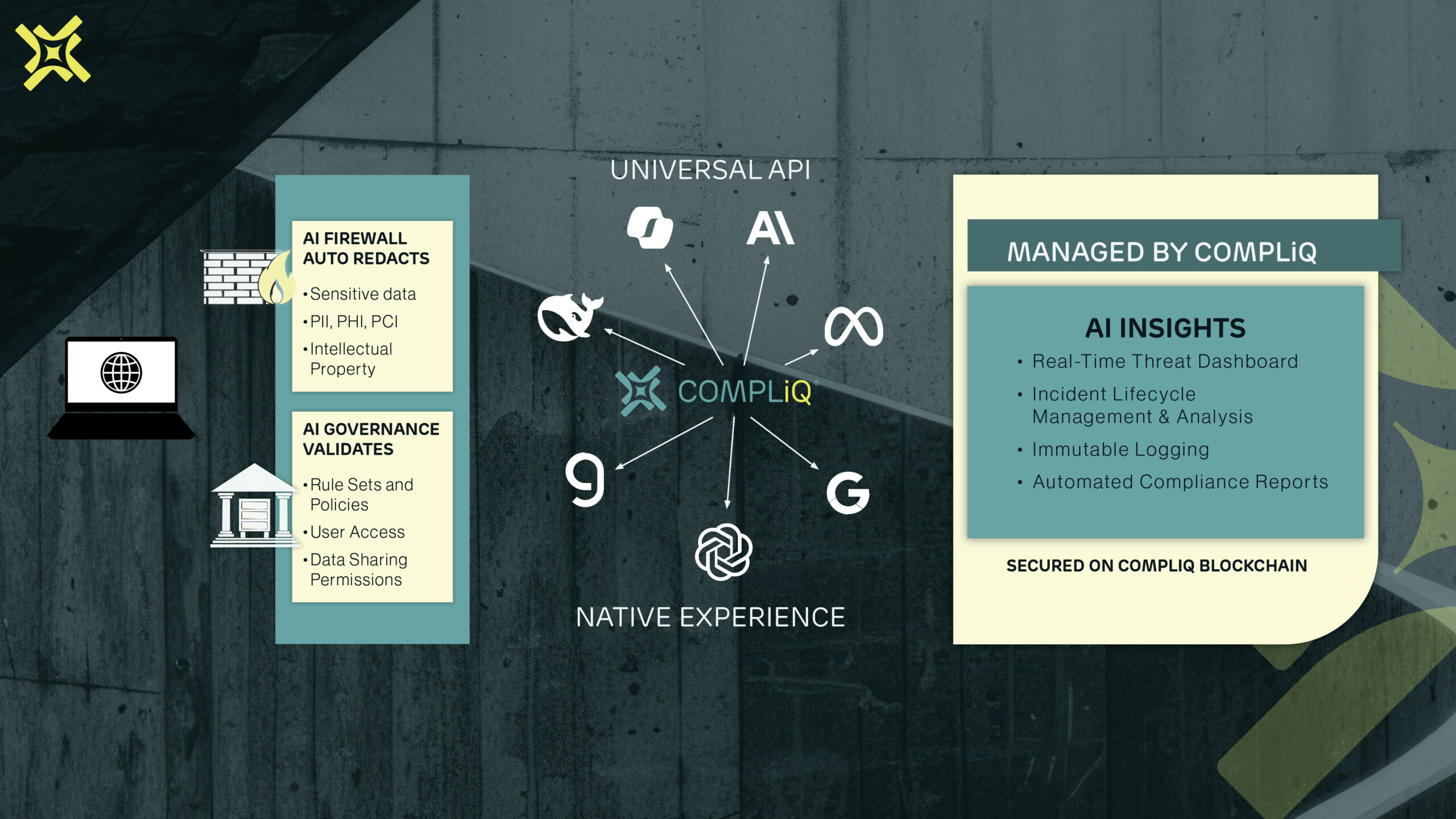

Multi-Layer Architecture

| Layer | Function |
|---|---|
| Pre-Screening | Quick boolean check on incoming data |
| Validation | L2/L3 distributed validation |
| AI Ensemble | Multiple micro-models for accuracy |
| Processing | Temporary Processing Model assembly |
| Audit | Immutable blockchain logging |

Every AI interaction is validated before processing and logged after completion.
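
A simplified sketch of that validate-then-log flow, under heavy assumptions: the pre-screen and micro-models below are toy keyword checks (hypothetical, not the patent-pending scanner), the Validation and Processing layers are elided, and the audit layer is approximated by a SHA-256 hash chain rather than an actual blockchain. The hash chain shows the property that matters for audits: altering any logged entry breaks verification.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, record: dict) -> str:
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode()).hexdigest()


@dataclass
class AuditLog:
    """Append-only hash chain: each entry's hash covers the previous hash."""

    entries: list = field(default_factory=list)

    def append(self, record: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        digest = _digest(prev_hash, record)
        self.entries.append({"record": record, "prev": prev_hash, "hash": digest})
        return digest

    def verify(self) -> bool:
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["record"]):
                return False
            prev_hash = entry["hash"]
        return True


def pre_screen(text: str) -> bool:
    # Pre-Screening layer (hypothetical): cheap boolean check on incoming data.
    return bool(text.strip()) and "SSN:" not in text


def ensemble_score(text: str) -> float:
    # AI Ensemble layer (toy stand-ins for micro-models): average their votes.
    lowered = text.lower()
    votes = [
        0.0 if "password" in lowered else 1.0,
        0.0 if "confidential" in lowered else 1.0,
        1.0 if len(text) < 2000 else 0.5,
    ]
    return sum(votes) / len(votes)


def handle(text: str, log: AuditLog) -> float:
    # Validate before processing: a failed pre-screen skips the ensemble entirely.
    score = ensemble_score(text) if pre_screen(text) else 0.0
    # (Validation and Processing layers omitted.) Log every outcome afterwards.
    log.append({"input_len": len(text), "score": score})
    return score


log = AuditLog()
handle("Draft a client email about fees", log)
handle("Here is my password: hunter2", log)
print(log.verify())  # True
log.entries[0]["record"]["score"] = 0.0  # simulate tampering with a past entry
print(log.verify())  # False
```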

The Pivot

| Phase | What Happened |
|---|---|
| 6 months building | Scanner architecture, trust calibration, blockchain audit logs |
| The blocker | Custodian API access denied; the RIA-specific vision couldn’t function without it |
| The pivot | Reframed from “RIA compliance” to “enterprise AI security” |
| The save | Modular, API-agnostic architecture preserved all technical work |

Lesson: External dependencies can invalidate months of work overnight. Build modularly from the start.

Trust Calibration

| Confidence | Action | Rationale |
|---|---|---|
| 90%+ | Auto-pass + logging | Don’t slow down obviously safe interactions |
| 70-90% | Soft alert + enhanced logging | Proceed with monitoring |
| Below 70% | Human review | Compliance officer decides |

The system learns from human decisions on edge cases, improving its scoring over time.
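
The confidence bands in the table map directly to thresholds. The Python sketch below is a hypothetical illustration: the `TrustCalibrator` class, the per-rule offset, and the 0.05 learning rate are assumptions, and the feedback rule is a simple incremental adjustment standing in for whatever scoring model the production system uses.

```python
from dataclasses import dataclass, field


@dataclass
class TrustCalibrator:
    auto_pass: float = 0.90      # 90%+ -> auto-pass with logging
    soft_alert: float = 0.70     # 70-90% -> soft alert with enhanced logging
    learning_rate: float = 0.05  # hypothetical step size for reviewer feedback
    offsets: dict = field(default_factory=dict)  # hypothetical per-rule adjustment

    def calibrated(self, rule_id: str, raw: float) -> float:
        # Apply the learned offset for this rule and clamp to [0, 1].
        return min(1.0, max(0.0, raw + self.offsets.get(rule_id, 0.0)))

    def route(self, rule_id: str, raw: float) -> str:
        c = self.calibrated(rule_id, raw)
        if c >= self.auto_pass:
            return "auto_pass"
        if c >= self.soft_alert:
            return "soft_alert"
        return "human_review"  # below 70%: compliance officer decides

    def record_review(self, rule_id: str, raw: float, approved: bool) -> None:
        # Nudge the rule's offset toward the reviewer's verdict:
        # approvals raise future confidence, rejections lower it.
        target = 1.0 if approved else 0.0
        error = target - self.calibrated(rule_id, raw)
        self.offsets[rule_id] = self.offsets.get(rule_id, 0.0) + self.learning_rate * error


cal = TrustCalibrator()
print(cal.route("pii", 0.95))  # auto_pass
print(cal.route("pii", 0.80))  # soft_alert
print(cal.route("pii", 0.50))  # human_review
for _ in range(20):
    cal.record_review("pii", 0.65, approved=True)
print(cal.route("pii", 0.65))  # soft_alert
```

After 20 approvals the "pii" offset has risen to about 0.22, moving a raw 0.65 out of human review. Whether feedback should ever lift a rule into auto-pass is a policy decision; a real system would likely cap the offset or require periodic re-review.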

AI-Assisted Rule Development

| Before AI | After AI |
|---|---|
| Legal SME → I translate → Review cycles | Legal SME → AI generates → SME refines → I validate |
| Days per rule | ~60% faster |

This pattern (AI generates, human validates) became core to how we build.
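
A hypothetical sketch of how the “AI generates, human validates” pattern could be enforced in code: a scanner rule starts as an AI draft, an SME refines it, and it can only scan traffic after passing human-written test cases. The `ScannerRule` class, its statuses, the regex rule format, and the example “guaranteed returns” rule are illustrative assumptions, not the production rule format.

```python
import re
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    AI_DRAFT = "ai_draft"
    SME_REFINED = "sme_refined"
    VALIDATED = "validated"


@dataclass
class ScannerRule:
    rule_id: str
    pattern: str  # regex drafted by the model, then refined by the legal SME
    status: Status = Status.AI_DRAFT

    def refine(self, new_pattern: str) -> None:
        self.pattern = new_pattern
        self.status = Status.SME_REFINED

    def validate(self, must_match: list[str], must_not_match: list[str]) -> bool:
        # Human validation gate: only SME-refined rules that pass every case are promoted.
        if self.status is not Status.SME_REFINED:
            return False
        regex = re.compile(self.pattern)
        ok = all(regex.search(s) for s in must_match) and not any(
            regex.search(s) for s in must_not_match
        )
        if ok:
            self.status = Status.VALIDATED
        return ok

    def scan(self, text: str) -> bool:
        if self.status is not Status.VALIDATED:
            raise RuntimeError(f"rule {self.rule_id} has not been validated")
        return re.search(self.pattern, text) is not None


rule = ScannerRule("no-guaranteed-returns", r"guarantee")  # AI-generated first draft
rule.refine(r"(?i)\bguaranteed?\s+(returns?|profits?)\b")  # SME narrows the match
print(rule.validate(["We offer guaranteed returns"], ["Returns are not guaranteed"]))  # True
print(rule.scan("Guaranteed profits every quarter!"))  # True
```

The status gate makes the human step structural rather than optional: an unreviewed AI draft raises an error instead of silently scanning.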

Pod Leadership

| Cadence | Focus |
|---|---|
| Daily | Scanner rule logic, architecture decisions, blocker resolution |
| Weekly | Pod coordination, stakeholder alignment, roadmap |
| Monthly | Architecture reviews, security audits, patent documentation |

13-person pod: a Solution Architect, a BA, a PM, and 10 developers. Role: bridging product requirements and technical implementation.

Gallery

Multi-layer system design showing data loop, file processing, primary/secondary libraries, and compliance scoring